Chapter 3 Categorical predictors

The linear model where the predictor variables are categorical - so called factors - has come to be known as Analysis of Variance (ANOVA). In this chapter we first introduce ANOVA in a classic sense before showing how this is essentially a special case of the linear model.

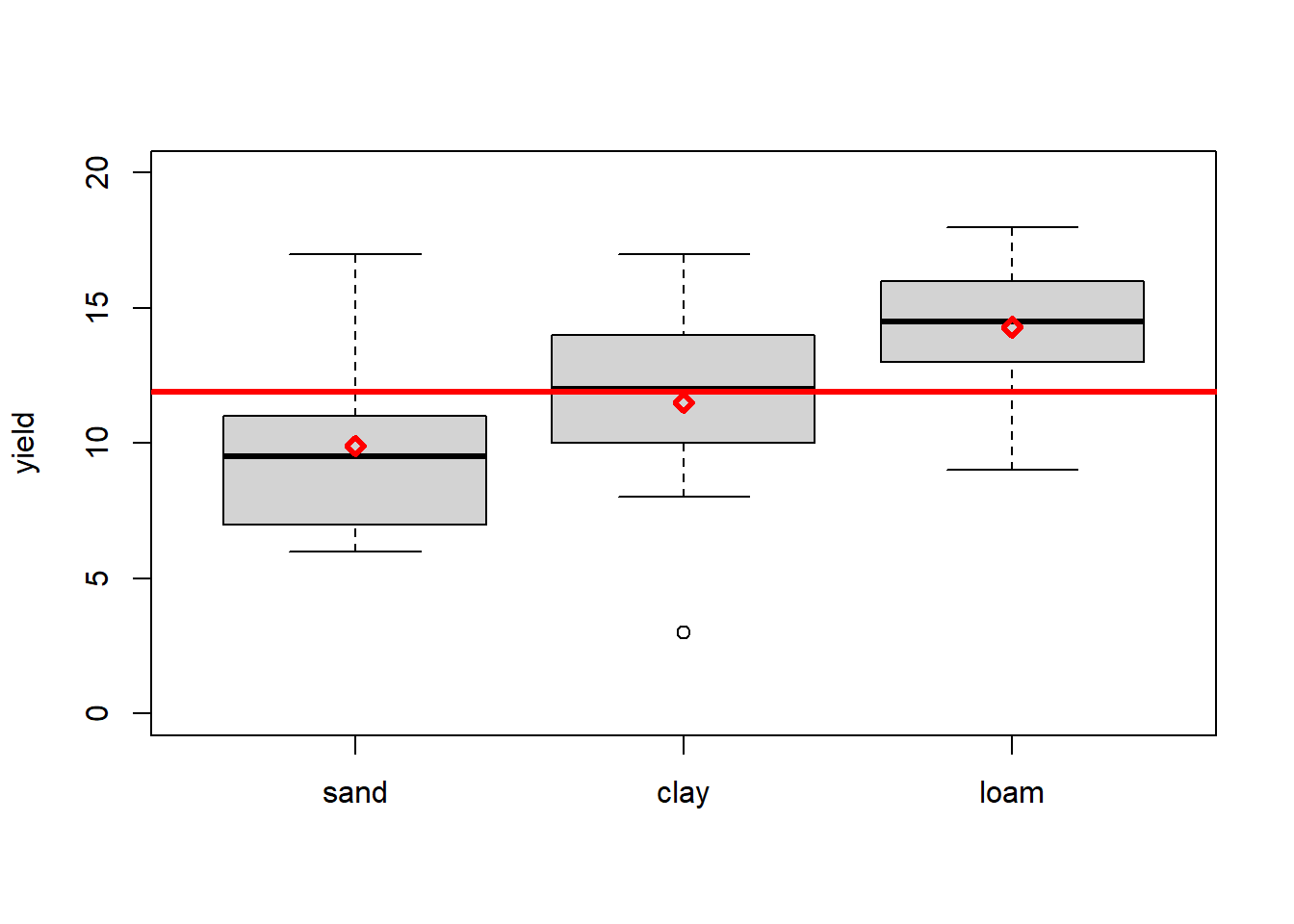

As an example we will look at a dataset of crop yields for different soil types from Crawley (2012) - see Figure 3.1 - asking the question: Does soil type significantly affect crop yield?

# load yields data

yields <- read.table("data/yields.txt",header=T)

# means per soil type

mu_j <- sapply(list(yields$sand,yields$clay,yields$loam),mean)

# overall mean

mu <- mean(mu_j)# boxplots of yield per soil type

boxplot(yields, ylim = c(0, 20), ylab = "yield")

abline(h = mu, lwd = 3, col = "red")

points(seq(1,3), mu_j, pch = 23, lwd = 3, col = "red")

Figure 3.1: Boxplots of yield per soil type (the factor) with individual and overall means marked in red. Data from: Crawley (2012)

The factor in this example is “soil type”. The categories of a factor are called levels, groups or treatments depending on the experimental setting and the research field. In the yields example the factor levels are “sand”, “clay” and “loam”. The parameters of an ANOVA are called effects - more below. ANOVA with one factor is called one-way ANOVA.

The typical question answered by ANOVA is: Are means across factor levels significantly different? This is tested by comparing the variation between levels (i.e. the overlap or not between boxplots in Figure 3.1) to the variation within levels (i.e. the size of the individual boxplots). In the case of one factor with two levels, ANOVA is equivalent to the familiar t-test.

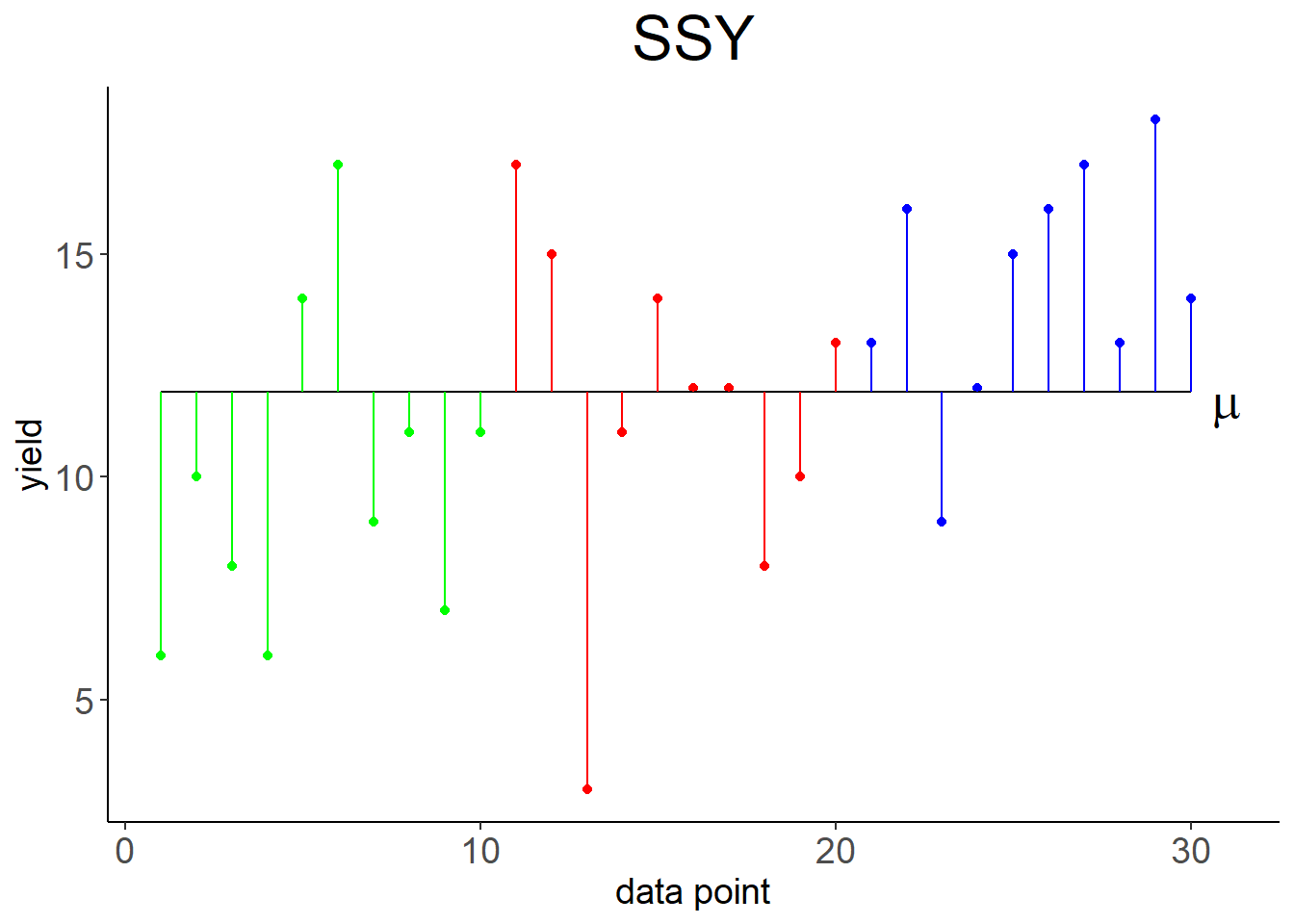

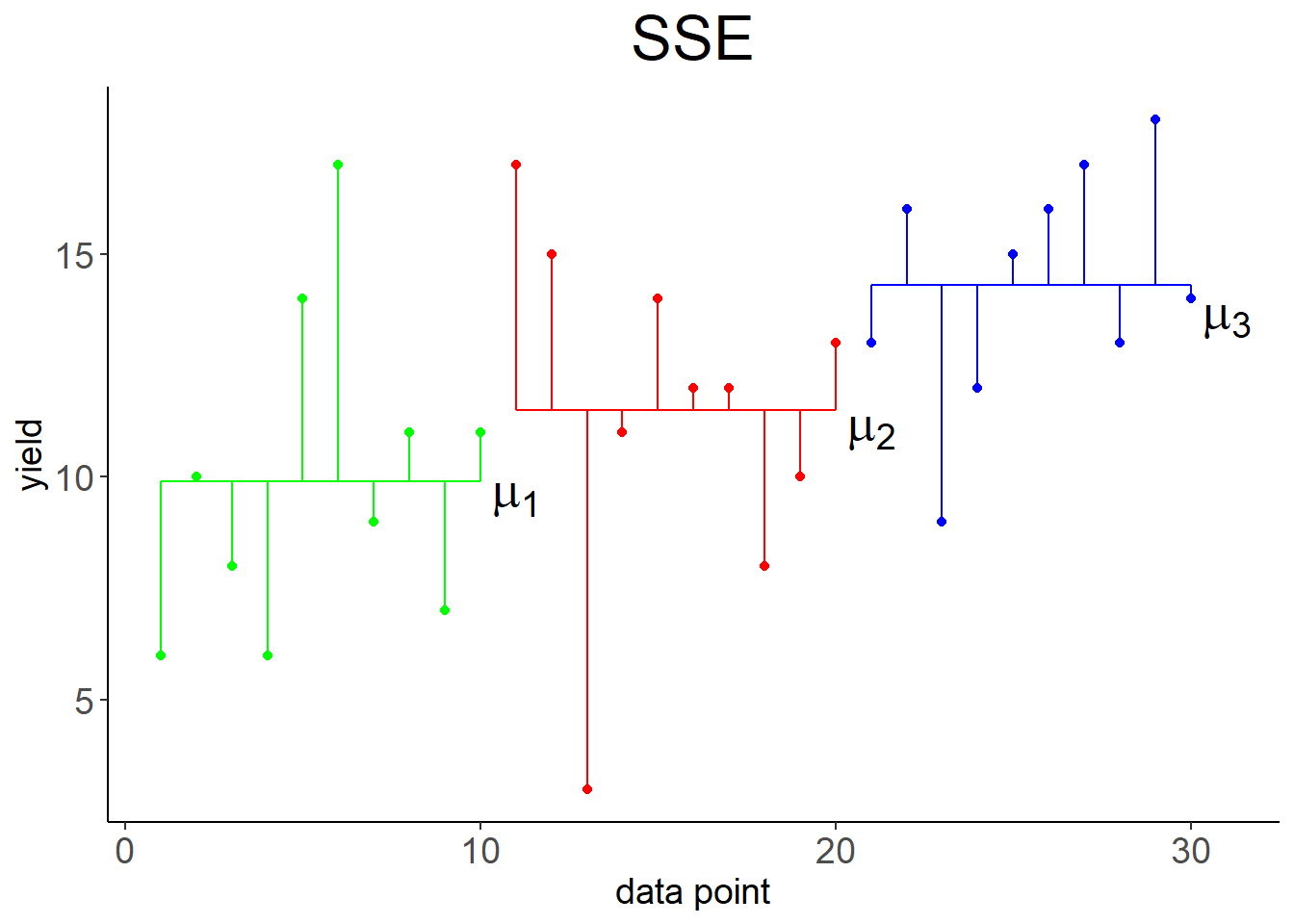

The Linear effects model formulation for ANOVA is: \[\begin{equation} y_{ji}=\mu+a_j+\epsilon_{ji} \tag{3.1} \end{equation}\] With \(j=1, 2, \ldots, k\) indexing the levels (e.g. sand, clay, loam), \(i=1, 2, \ldots, n_j\) indexing the data points at level \(j\), \(y_{ji}\) being the \(i\)th observation of the response variable (e.g. yield) at level \(j\), \(\mu\) being the overall mean of the response variable (Figure 3.2, left), \(a_j=\mu_j-\mu\) being the effect of level \(j\), and \(\epsilon_{ji}\) being the residual error. \(\mu_j\) is the mean of the response variable at level \(j\). So effectively the response variable for each level is predicted by its mean plus random noise (Figure 3.2, right): \[\begin{equation} y_{ji}=\mu_j+\epsilon_{ji} \tag{3.2} \end{equation}\]

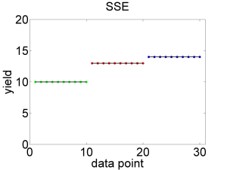

Figure 3.2: Left: Variation of data points around overall mean \(\mu\), summarised by \(SSY\). Right: Variation of data points around individual means \(\mu_1, \mu_2, \mu_3\), summarised by \(SSE\).

To see how the ANOVA model is essentially a linear model we look at what is called dummy coding of the categorical predictor (here soil type). This is the original data table:

## sand clay loam

## 1 6 17 13

## 2 10 15 16

## 3 8 3 9

## 4 6 11 12

## 5 14 14 15

## 6 17 12 16

## 7 9 12 17

## 8 11 8 13

## 9 7 10 18

## 10 11 13 14Now we expand this to a long table with dummy or indicator variables “clay” and “loam”:

yields2 <- data.frame(yield = c(yields$sand, yields$clay, yields$loam),

clay = c(rep(0,10), rep(1,10), rep(0,10)),

loam = c(rep(0,10), rep(0,10), rep(1,10)))

yields2## yield clay loam

## 1 6 0 0

## 2 10 0 0

## 3 8 0 0

## 4 6 0 0

## 5 14 0 0

## 6 17 0 0

## 7 9 0 0

## 8 11 0 0

## 9 7 0 0

## 10 11 0 0

## 11 17 1 0

## 12 15 1 0

## 13 3 1 0

## 14 11 1 0

## 15 14 1 0

## 16 12 1 0

## 17 12 1 0

## 18 8 1 0

## 19 10 1 0

## 20 13 1 0

## 21 13 0 1

## 22 16 0 1

## 23 9 0 1

## 24 12 0 1

## 25 15 0 1

## 26 16 0 1

## 27 17 0 1

## 28 13 0 1

## 29 18 0 1

## 30 14 0 1When “clay” is 1 and “loam” is 0 then we are in the clay category, when “clay” is 0 and “loam” is 1 then we are in the loam category, and if both are 0 we are in the “sand” category. This can be visualised in 3D (you can rotate this cube using the cursor):

Now we can use the familiar linear model, but with two predictors, to represent ANOVA: \[\begin{equation} y_i=\beta_0+\beta_1\cdot x_{i1}+\beta_2\cdot x_{i2}+\epsilon_i \tag{3.3} \end{equation}\] In this formulation, \(x_1\) is the indicator variable “clay” and \(x_2\) is the indicator variable “loam”. Both can only take values of 0 and 1, they are binary. Hence, looking at the 3D-plot above, when both \(x_1\) and \(x_2\) are 0 then we are in the front corner of the plot where we see the yield data for “sand”. The model is then reduced to \(y_i=\beta_0+\epsilon_i\), with \(\beta_0\) being the mean yield for “sand”.

When \(x_1=0\) and \(x_2=1\) then we are in the left corner of the 3D-plot where we see the “loam” yields. The model is then \(y_i=\beta_0+\beta_2+\epsilon_i\) with \(\beta_0+\beta_2\) being the mean yield for “loam”.

Finally, when \(x_1=1\) and \(x_2=0\) then we are in the right corner of the 3D-plot where we see the “clay” yields. The model is then \(y_i=\beta_0+\beta_1+\epsilon_i\) with \(\beta_0+\beta_1\) being the mean yield for “clay”. \(\beta_1\) and \(\beta_2\) are thus increments added to what’s called the base category (in this case “sand”) to arrive at the new means for “clay” and “loam”, respectively. This is symbolised by the red lines in the 3D-plot. We are basically modelling unique means for each factor level; Equations (3.3) and (3.2) are equivalent.

If you remember, we already looked at an ANOVA table under linear regression (chapter 2). Let’s now see how this is essentially similar to the actual ANOVA, while noting some key differences. Table 3.1 shows the one-way ANOVA table.

| Source | Sum of squares |

Degrees of freedom \((df)\) | Mean squares | F statistic \(\left(F_s\right)\) | \(\Pr\left(Z\geq F_s\right)\) |

|---|---|---|---|---|---|

| Level | \(SSA=\\SSY-SSE\) | \(k-1\) | \(\frac{SSA}{df_{SSA}}\) | \(\frac{\frac{SSA}{df_{SSA}}}{s^2}\) | \(1-F\left(F_s,1,n-k\right)\) |

| Error | \(SSE\) | \(n-k\) | \(\frac{SSE}{df_{SSE}}=s^2\) | ||

| Total | \(SSY\) | \(n-1\) |

Compare this to Table 2.3. The essential differences are: The explained variation is now labeled “level” with the notation \(SSA\), instead of “regression” and \(SSR\). The number of parameters subtracted from the data degrees of freedom is now \(k\), the number of levels, instead of 2. The error variation is now calculated as \(SSE=\sum_{j=1}^{k}\sum_{i=1}^{n_j}\left(y_{ji}-\bar y_j\right)^2\) (Figure 3.2, right), instead of \(SSE=\sum_{i=1}^{n}\left(y_i-\left(\beta_0+\beta_1\cdot x_i\right)\right)^2\). The total variation, however, is the same: \(SSY=\sum_{j=1}^{k}\sum_{i=1}^{n_j}\left(y_{ji}-\bar y\right)^2=\sum_{i=1}^{n}\left(y_i-\bar y\right)^2\) (Figure 3.2, left).

For comparison, a perfect model fit would look like Figure 3.3.

Figure 3.3: Hypothetical perfect fit of an ANOVA model. Left: Variation of data points around overall mean, summarised by \(SSY\). Right: Variation of data points around individual means, summarised by \(SSE\).

When we estimate the individual means of the factor levels, they come with a standard error just like the linear regression parameters. This is: \[\begin{equation} s_{\mu_j}=\sqrt{\frac{s^2}{n_j}} \tag{3.4} \end{equation}\] With \(n_j\) being 10 in our example, which does not need to be the same across the factor levels, and \(s^2=\frac{SSE}{n-k}\), the error variance from the ANOVA table (Table 3.1). Let’s calculate the various means, effect sizes and corresponding errors again:

# define constants

n <- 30

k <- 3

n_j <- 10

# means per soil type

mu_j <- sapply(list(yields$sand,yields$clay,yields$loam),mean)

mu_j## [1] 9.9 11.5 14.3## [1] 11.9## [1] -2.0 -0.4 2.4# SSE

SSE <- sum(sapply(list(yields$sand,yields$clay,yields$loam),function (x) sum((x-mean(x))^2) ))

SSE## [1] 315.5## [1] 11.69# standard error of individual means

# here the same across factor levels as n_j is homogeneous

s_mu_j <- sqrt(s2/n_j)

s_mu_j## [1] 1.081Finally, what we are really interested in are the differences between factor level means \(\mu_j\) and whether they are statistically significant, i.e. large enough compared to the variation within each factor level. This is a t-test problem (comparing two means) for which we need the differences of means, let’s call them \(\Delta\mu_j\), and the standard errors of these differences: \[\begin{equation} s_{\Delta\mu_j}=\sqrt{\frac{2\cdot s^2}{n_j}} \tag{3.5} \end{equation}\]

The t-test statistic then is: \[\begin{equation} t_s=\frac{\Delta\mu_j}{s_{\Delta\mu_j}} \tag{3.6} \end{equation}\]

Compare the standard t-test where the test statistic is \(t_s=\frac{\bar x_1-\bar x_2}{\sqrt{\frac{s_1^2}{n_1}+\frac{s_2^2}{n_2}}}\). Since in this dataset we have equal sample sizes across levels and homogeneous variance (homoscedasticity) is a fundamental assumption of ANOVA (as it is of linear regression), the standard t-test statistic simplifies to Equation (3.6).

The corresponding p-value of the 2-sided test then is: \[\begin{equation} \Pr\left(|Z|\geq t_s\right)=2\cdot\left(1-t\left(t_s,n'-3\right)\right) \tag{3.7} \end{equation}\] With \(t\left(t_s,n'-3\right)\) being the value of the cumulative distribution function (cdf) of the t-distribution with parameter \(n'-3\) at the location \(t_s\), and \(n'=30\) in the yields example.3

Let’s calculate these t-tests “by hand” in R:

## [1] 1.6 4.4 2.8# standard error of differences

# here the same across factor levels as n_j is homogeneous

s_delta_mu_j <- sqrt(2*s2/n_j)

s_delta_mu_j## [1] 1.529## [1] 1.047 2.878 1.832## [1] 0.304557 0.007728 0.078070We conclude that the yields on sand and loam are just about significantly different at a conventional significance level of 0.01, with yields on sand being 4.4 units lower on average. The other yield differences are not statistically significant (compare Figure 3.1).

Finally, we show that we get the results of the ANOVA and bivariate t-tests with the lm() function:

# expand to a long variable "yield" and a index variable "soiltype"

yields_long <- data.frame(yield = c(yields$sand, yields$clay, yields$loam),

soiltype = as.factor(c(rep(1,10), rep(2,10), rep(3,10))))

# fit linear model using lm

yield_fit <- lm(yield ~ soiltype, data = yields_long)

summary(yield_fit)##

## Call:

## lm(formula = yield ~ soiltype, data = yields_long)

##

## Residuals:

## Min 1Q Median 3Q Max

## -8.5 -1.8 0.3 1.7 7.1

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 9.90 1.08 9.16 9e-10 ***

## soiltype2 1.60 1.53 1.05 0.3046

## soiltype3 4.40 1.53 2.88 0.0077 **

## ---

## Signif. codes:

## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 3.42 on 27 degrees of freedom

## Multiple R-squared: 0.239, Adjusted R-squared: 0.183

## F-statistic: 4.24 on 2 and 27 DF, p-value: 0.025The intercept is the mean of the base category “sand”, \(\beta_0\) in Equation (3.3). The predictor “soiltype2” is the difference between “sand” and “clay”, \(\beta_1\) in Equation (3.3). The predictor “soiltype3” is the difference between “sand” and “loam”, \(\beta_2\) in Equation (3.3). Note the corresponding standard errors and t-test statistics that we calculated by hand above. This is a more elegant solution than doing bivariate t-tests.

An alternative setup using lm() is to estimate the three separate means instead of the mean of one base category and two differences from this. This is achieved through the notation 0 + ..., which suppresses the overall intercept. Effectively, this now is a linear model with three separate intercepts:

##

## Call:

## lm(formula = yield ~ 0 + soiltype, data = yields_long)

##

## Residuals:

## Min 1Q Median 3Q Max

## -8.5 -1.8 0.3 1.7 7.1

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## soiltype1 9.90 1.08 9.16 9.0e-10 ***

## soiltype2 11.50 1.08 10.64 3.7e-11 ***

## soiltype3 14.30 1.08 13.23 2.6e-13 ***

## ---

## Signif. codes:

## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 3.42 on 27 degrees of freedom

## Multiple R-squared: 0.932, Adjusted R-squared: 0.925

## F-statistic: 124 on 3 and 27 DF, p-value: 6.68e-16From here, bivariate comparisons can proceed like we did by hand above.

References

Note that I am deviating here from Crawley (2012) who uses a t-distribution with \(n'-2\) degrees of freedom and \(n'=20\), reasoning that two means have been estimated each having 10 replicate measurements. In keeping with the following

lm()results, however, I reason that we have actually estimated three means together each having 10 replicates, i.e. using a total of 30 data points.↩︎